Definition

The word “eigen” comes from German and literally means “characteristic.” Eigenvectors are aptly named because they give the trend that a system ultimately follows: when a matrix that has an eigenvector is applied to a set of coordinates again and again, the coordinates ultimately settle into a relation as close to the eigenvector as possible. [1]

Who

This page is for those who learned about eigenvalues and eigenvectors in a linear algebra course where the presentation of the material may have been very dry and mathematical, so that you do not understand the usefulness of eigenvectors and eigenvalues. You are not alone. Here is what some have said about learning linear algebra:

I am teaching Mathematics in an engineering college. We just teach students how to solve the problems but not why it is essential and where it is applied in the real life. But this video is just more than what I expect to teach to my students. Thank you for this wonderful video and visual demonstration on eigenvectors. [3]

In my freshman year of college, Linear Algebra was part of the first topics taken in Engineering Mathematics. I always skipped the section of Eigenvectors and Eigenvalues, due to poor understanding and didn’t see much use of it. In my recent research, I’ve come to see the practical application of them. [4]

I wish I had this in college. I struggled with this subject so much. [5]

What

Eigenvalue is a mathematical concept, and therefore isn’t confined to any one specific application. However, the whole business appears all over physics and engineering, and the precise “meaning” of an eigenvalue depends on the application.

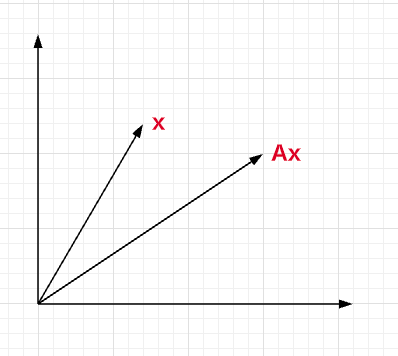

Consider an arbitrary vector in an N dimensional vector space. If you multiply that vector by an NxN matrix, you get some different vector – the matrix transforms the first vector into the second one. The matrix is a general representation of some linear transformation.

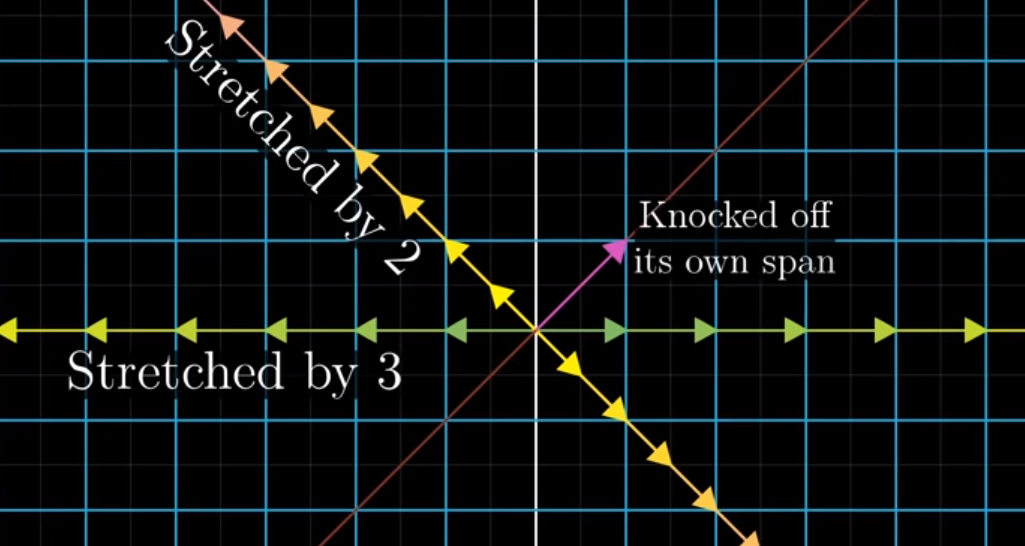

In general the second vector may have a different magnitude and a different direction from the first one. For certain specific vectors, though, the transformation changes only the magnitude: the output vector is parallel to the input vector. Such vectors are called eigenvectors. For many matrices (the diagonalizable ones, which include all real symmetric matrices) you can find N linearly independent eigenvectors in an N-dimensional situation, and these then form a possible basis of the vector space. Keep in mind that these eigenvectors are determined by the matrix.

So, if you multiply an eigenvector by the matrix, the output will have a different magnitude but the same direction – the output vector is proportional to the input vector, with a scalar constant of proportionality. That constant is the eigenvalue associated with that particular eigenvector.

So, an eigenvalue is just the “magnitude part” of a linear transformation.

In some applications the eigenvalues are related to frequencies. As noted above, though, the precise physical meaning is determined by the physical arena of application under study. [10]

⭐ Eigenvalues and eigenvectors are among the most captivating concepts in linear algebra. In simple terms, eigenvalues represent the extent to which a matrix “stretches” or “compresses” certain specific vectors, which are known as eigenvectors. They play a vital role in understanding and interpreting the behavior of matrices, both in theoretical mathematics and in practical applications such as physics, engineering, and computer science. [11]

Eigenvectors and eigenvalues show us the general trend of how a system changes. The eigenvalues show us the magnitude of the rate of change of the system, and the eigenvectors show us the direction in which that change is taking place. [1]

Eigenvectors are the vectors that, when multiplied by a matrix (a linear transformation), result in another vector with the same direction but scaled (hence a scalar multiple), forward or in reverse, by a factor called the eigenvalue. In simpler words, the eigenvalue can be seen as the scaling factor for its eigenvectors. Here is the formula for what is called the eigenequation.

Ax=λx

In the above equation, the matrix A acts on the vector x and the outcome is another vector Ax that has the same direction as the original vector x but is stretched or shrunk, forward or in reverse, by the scalar multiple λ. The vector x is called an eigenvector of A and λ is called its eigenvalue. For a general vector x that is not an eigenvector, by contrast, the new vector Ax has a different direction than x. [2]
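As a minimal sketch of the eigenequation, the NumPy snippet below checks Ax = λx numerically. The 2x2 matrix `A` and the test vector `v` are hypothetical values chosen for illustration, not taken from the references.

```python
import numpy as np

# Hypothetical symmetric 2x2 matrix, chosen only for illustration.
A = np.array([[2.0, 1.0],
              [1.0, 2.0]])

# np.linalg.eig returns the eigenvalues and a matrix whose columns are eigenvectors.
eigenvalues, eigenvectors = np.linalg.eig(A)

for i in range(len(eigenvalues)):
    lam = eigenvalues[i]
    x = eigenvectors[:, i]
    # For an eigenpair, A @ x and lam * x are the same vector.
    print(lam, np.allclose(A @ x, lam * x))

# A vector that is not an eigenvector changes direction under A.
v = np.array([1.0, 0.0])
Av = A @ v
# The 2D cross product is zero only when v and Av are parallel.
cross = v[0] * Av[1] - v[1] * Av[0]
print(Av, cross)
```

For this particular matrix the eigenvectors point along (1, 1) and (1, -1), so the vector (1, 0) is tilted by the transformation rather than merely scaled.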

What are Eigenvectors?

We know that vectors have both magnitude and direction when plotted on an XY (2-dim) plane. For the purposes of this article, a linear transformation of a vector is the multiplication of the vector by a matrix, which in general changes both the magnitude of the vector and its direction. Eigenvectors are the special vectors whose direction the transformation leaves unchanged.

What are Eigenvalues?

They’re simply the constants by which the Eigenvectors are stretched or shrunk along their span when transformed linearly. Think of Eigenvectors and Eigenvalues as a summary of a large matrix. [4]

Generally speaking, eigenvalues and eigenvectors allow us to “reduce” a linear operation to separate, simpler problems. For example, if a stress is applied to a “plastic” solid, the deformation can be dissected into “principal directions”: those directions in which the deformation is greatest. Vectors in the principal directions are the eigenvectors, and the percentage deformation in each principal direction is the corresponding eigenvalue. [6]

To explain eigenvalues, we first explain eigenvectors. Almost all vectors change direction, when they are multiplied by A. Certain exceptional vectors x are in the same direction as Ax. Those are the “eigenvectors”. Multiply an eigenvector by A, and the vector Ax is a number λ times the original x.

The basic equation is Ax = λx. The number λ is an eigenvalue of A.

The eigenvalue λ tells whether the special vector x is stretched or shrunk or reversed or left unchanged—when it is multiplied by A. We may find λ = 2 or 1/2 or −1 or 1. The eigenvalue λ could be zero! Then Ax = 0x means that this eigenvector x is in the nullspace. [7]
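The cases listed above (stretched, reversed, left unchanged, or sent to zero) can be seen with two small matrices. This is only a sketch: the matrices `A` and `S` and the vectors are hypothetical examples picked so that the arithmetic is easy to follow by hand.

```python
import numpy as np

# Hypothetical singular matrix: its second row is twice the first.
A = np.array([[1.0, 2.0],
              [2.0, 4.0]])

x_null = np.array([2.0, -1.0])     # eigenvector with eigenvalue 0
x_stretch = np.array([1.0, 2.0])   # eigenvector with eigenvalue 5

print(A @ x_null)      # the zero vector, so x_null lies in the nullspace of A
print(A @ x_stretch)   # 5 times x_stretch

# Swapping the two coordinates reflects the plane across the line y = x.
S = np.array([[0.0, 1.0],
              [1.0, 0.0]])

x_same = np.array([1.0, 1.0])      # eigenvalue 1: left unchanged
x_flip = np.array([1.0, -1.0])     # eigenvalue -1: reversed
print(S @ x_same, S @ x_flip)
```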

The eigenvector and eigenvalue represent the “axes” of the transformation. [8]

Why

We often want to transform our data to reduce the number of features while preserving as much variance (i.e., the differences among our samples) as we can. Often, you’ll hear folks refer to principal component analysis (PCA) and singular value decomposition (SVD), but we can’t appreciate how these methods work without first understanding what eigenvectors and eigenvalues are. [9]

The eigenvectors of a linear transform are those vectors that remain pointed in the same directions. For these vectors, the effect of the transform matrix is just scalar multiplication. For each eigenvector, the eigenvalue is the scalar that the vector is scaled by under the transform.

Peter Barrett Bryan

See Theoretical Knowledge Vs Practical Application.

How

I don’t show you how to compute eigenvalues and eigenvectors. Many of the References, Additional Reading, websites and YouTube videos will assist you with understanding and applying eigenvectors and eigenvalues.

As some professors say: “It is intuitively obvious to even the most casual observer.”

Application

Powell, Victor and Lewis Lehe. 2024. “Eigenvectors and Eigenvalues Explained Visually.” Steady States. Explained Visually. Accessed June 13. https://setosa.io/ev/eigenvectors-and-eigenvalues/.

Suppose that, every year, a fraction p of New Yorkers move to California and a fraction q of Californians move to New York. Drag the circles in the image on the page to decide these fractions and the number starting in each state.

See also Andrew Chamberlain, Ph.D. 2017. “Using Eigenvectors to Find Steady State Population Flows.” Medium. Medium. October 4. https://medium.com/@andrew.chamberlain/using-eigenvectors-to-find-steady-state-population-flows-cd938f124764.
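The migration example can be sketched in a few lines of NumPy. The values of `p`, `q` and the starting shares are hypothetical, and the matrix layout (columns sum to 1, state ordered as New York then California) is an assumption made for this illustration. The long-run population split is the eigenvector with eigenvalue 1.

```python
import numpy as np

# Hypothetical yearly migration fractions.
p = 0.3   # fraction of New Yorkers who move to California
q = 0.1   # fraction of Californians who move to New York

# Transition matrix acting on the state [New York share, California share].
T = np.array([[1 - p, q],
              [p, 1 - q]])

# Simulate many years from a hypothetical 50/50 starting split.
population = np.array([0.5, 0.5])
for _ in range(200):
    population = T @ population

# The steady state is the eigenvector whose eigenvalue is 1,
# rescaled so that its entries sum to 1.
eigenvalues, eigenvectors = np.linalg.eig(T)
k = np.argmin(np.abs(eigenvalues - 1.0))
steady = eigenvectors[:, k] / eigenvectors[:, k].sum()

print(population)
print(steady)
```

Under these assumptions the simulated shares and the rescaled eigenvector agree, and both are proportional to (q, p).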

Principal Component Analysis 4 Dummies: Eigenvectors, Eigenvalues and Dimension Reduction. 2013. https://georgemdallas.wordpress.com/2013/10/30/principal-component-analysis-4-dummies-eigenvectors-eigenvalues-and-dimension-reduction/.

But what do these eigenvectors represent in real life? The old axes were well defined (age and hours on internet, or any 2 things that you’ve explicitly measured), whereas the new ones are not. This is where you need to think. There is often a good reason why these axes represent the data better, but maths won’t tell you why, that’s for you to work out.

Music

All music is just eigenvalues and eigenvectors. The strings of a guitar, a sitar or a santoor – they resonate at their eigenvalue frequencies. The membranes of percussion instruments like the Indian tabla, drums, etc. resonate at their eigenvalues and move according to the two dimensional eigenvectors.

Statistics

The eigenvectors of the covariance matrix of your data set correspond to the directions of maximum variance. Sorting them by decreasing eigenvalue orders these directions from the most variance explained to the least. This is the main idea behind principal component analysis (PCA), a dimensionality reduction technique often used in machine learning and AI.
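A minimal sketch of this idea, assuming PCA is done through an eigen-decomposition of the covariance matrix. The data set below is synthetic and hypothetical (two correlated features generated from a seeded random generator); a library such as scikit-learn would normally handle these steps.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: 500 samples, 2 features that mostly vary together.
latent = rng.normal(size=(500, 1))
X = np.hstack([latent, 0.5 * latent]) + 0.1 * rng.normal(size=(500, 2))

# Center the data and compute the covariance matrix of the features.
Xc = X - X.mean(axis=0)
cov = np.cov(Xc, rowvar=False)

# eigh is intended for symmetric matrices; it returns eigenvalues in ascending order.
eigenvalues, eigenvectors = np.linalg.eigh(cov)

# Reorder so the direction of largest variance comes first.
order = np.argsort(eigenvalues)[::-1]
eigenvalues = eigenvalues[order]
eigenvectors = eigenvectors[:, order]

# Fraction of the total variance captured by each direction.
explained = eigenvalues / eigenvalues.sum()

# Reduce from 2 features to 1 by projecting onto the first eigenvector.
projected = Xc @ eigenvectors[:, :1]

print(explained)
print(projected.shape)
```

The variance of the projected data equals the largest eigenvalue, which is why the eigenvalues can be read as "variance explained" by each direction.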

Control Theory

The eigenvalues of the system matrix of a linear system give you information about the stability and response of your system. A continuous-time system is stable if all eigenvalues have negative real part (they lie in the left half of the complex plane). A discrete-time system is stable if all eigenvalues have magnitude less than 1 (they lie inside the unit circle in the complex plane).
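The two stability tests translate almost directly into code. This is a sketch: the function names `is_stable_continuous` and `is_stable_discrete` and both example matrices are hypothetical, introduced only for this illustration.

```python
import numpy as np

def is_stable_continuous(A):
    """True if every eigenvalue of A has a negative real part."""
    return bool(np.all(np.linalg.eigvals(A).real < 0))

def is_stable_discrete(A):
    """True if every eigenvalue of A lies strictly inside the unit circle."""
    return bool(np.all(np.abs(np.linalg.eigvals(A)) < 1))

# Hypothetical continuous-time system matrix with eigenvalues -1 and -2.
A_cont = np.array([[0.0, 1.0],
                   [-2.0, -3.0]])

# Hypothetical discrete-time system matrix with eigenvalues 0.5 and 1.1.
A_disc = np.array([[0.5, 0.2],
                   [0.0, 1.1]])

print(is_stable_continuous(A_cont))   # both real parts are negative
print(is_stable_discrete(A_disc))     # 1.1 lies outside the unit circle
```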

Graphs

The eigenvalues of matrices associated with a graph, like the adjacency matrix and the Laplacian matrix, relate to various structural properties of the graph. For instance, the number of 0 eigenvalues of the Laplacian matrix is equal to the number of connected components of the graph. For a connected graph, the number of distinct eigenvalues of the adjacency matrix is at least one plus the diameter of the graph (the length of the longest shortest path between two vertices), and so on.
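The Laplacian claim can be checked on a tiny graph. This is a sketch with a hypothetical five-node graph made of two separate pieces. The tolerance `atol=1e-9` is an assumption needed because floating-point eigenvalues are rarely exactly zero.

```python
import numpy as np

# Hypothetical undirected graph: nodes 0-1-2 form one piece, nodes 3-4 another.
edges = [(0, 1), (1, 2), (3, 4)]
n = 5

adjacency = np.zeros((n, n))
for i, j in edges:
    adjacency[i, j] = 1.0
    adjacency[j, i] = 1.0

# Laplacian = degree matrix minus adjacency matrix.
laplacian = np.diag(adjacency.sum(axis=1)) - adjacency

# eigvalsh is intended for symmetric matrices and returns real eigenvalues.
eigenvalues = np.linalg.eigvalsh(laplacian)

# Count the eigenvalues that are zero up to a small tolerance.
num_components = int(np.sum(np.isclose(eigenvalues, 0.0, atol=1e-9)))

print(eigenvalues)
print(num_components)
```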

Finance

The eigenvalues and eigenvectors of a matrix are often used in the analysis of financial data and are integral in extracting useful information from the raw data. They can be used for predicting stock prices and analyzing correlations between various stocks, corresponding to different companies. They can be used for analyzing risks. There is a branch of Mathematics, known as Random Matrix Theory, which deals with properties of eigenvalues and eigenvectors, that has extensive applications in Finance, Risk Management, Meteorological studies, Nuclear Physics, etc.

Google Search Results

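Google’s original ranking algorithm, PageRank, builds a giant matrix from the links between web pages and finds its dominant eigenvector (the one with eigenvalue 1), typically by repeated matrix-vector multiplication rather than by a full diagonalization. Each website’s entry in that eigenvector serves as its importance score, which is used to order the results. See the paper about this here: http://www.rose-hulman.edu/~bryan/googleFinalVersionFixed.pdf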
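The ranking idea can be sketched as finding the dominant eigenvector of a link matrix by repeated multiplication (power iteration). Everything specific here is an assumption for illustration: the four-page web in `links`, the damping value 0.85, and the fixed iteration count. It is not a description of Google's production system.

```python
import numpy as np

# Hypothetical web: each page maps to the pages it links to.
links = {0: [1, 2, 3], 1: [2, 3], 2: [0], 3: [0, 2]}
n = len(links)

# Column-stochastic link matrix: column j spreads page j's vote over its links.
M = np.zeros((n, n))
for page, outgoing in links.items():
    for target in outgoing:
        M[target, page] = 1.0 / len(outgoing)

# Assumed damping factor: mix the link matrix with a uniform jump to any page.
damping = 0.85
G = damping * M + (1 - damping) / n * np.ones((n, n))

# Power iteration: repeated multiplication converges to the eigenvector
# whose eigenvalue is 1.
rank = np.full(n, 1.0 / n)
for _ in range(200):
    rank = G @ rank

# Pages ordered from highest to lowest score.
ordering = np.argsort(rank)[::-1]
print(rank)
print(ordering)
```

Because every column of `G` sums to 1, the scores keep summing to 1 throughout the iteration, so they can be read as shares of total importance.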

Quantum Mechanics

Eigenvalues are possible measurement results of an observable represented by an operator.

Classical Mechanics

Eigenvectors of the moment of inertia tensor represent the principal (“main”) axes of a solid body, around which it can rotate without wobbling, and the corresponding eigenvalues are the scalar moments of inertia about those axes.

References

[1] Eigenvectors and Eigenvalues: A deeper understanding. 2021. https://medium.com/analytics-vidhya/eigenvectors-and-eigenvalues-a-deeper-understanding-c715f8ded4c7.

[2] Kumar, Ajitesh. 2020. “Why & When To Use Eigenvalues & Eigenvectors? – Data Analytics”. Data Analytics. https://vitalflux.com/why-when-use-eigenvalue-eigenvector/.

[3] Real life example of Eigen values and Eigen vectors. 2021. youtube.com. https://www.youtube.com/watch?v=R13Cwgmpuxc.

[4] “Understanding The Role Of Eigenvectors And Eigenvalues In PCA Dimensionality Reduction.”. 2019. Medium. https://medium.com/@dareyadewumi650/understanding-the-role-of-eigenvectors-and-eigenvalues-in-pca-dimensionality-reduction-10186dad0c5c.

[5] Eigenvectors and eigenvalues | Chapter 14, Essence of linear algebra. 2021. youtube.com. https://www.youtube.com/watch?v=PFDu9oVAE-g.

[6] Algebra, Linear, Cantab Morgan, and Linear Algebra. 2009. “Practical Uses For Eigenvalues”. Physics Forums | Science Articles, Homework Help, Discussion. https://www.physicsforums.com/threads/practical-uses-for-eigenvalues.312625/.

[7] “Introduction To Linear Algebra, 5th Edition”. 2021. math.mit.edu. http://math.mit.edu/~gs/linearalgebra/.

[8] “An Intuitive Guide To Linear Algebra – BetterExplained”. 2021. betterexplained.com. https://betterexplained.com/articles/linear-algebra-guide/.

[9] Bryan, Peter Barrett. “Eigen Intuitions: Understanding Eigenvectors And Eigenvalues”. 2022. Medium. https://towardsdatascience.com/eigen-intuitions-understanding-eigenvectors-and-eigenvalues-630e9ef1f719.

[10] Ingram, Kip. “What Do Eigenvalues Tell Us?”. 2023. Quora. https://qr.ae/pyWvCm.

[11] ⭐ Zhang, Renda. 2023. “A Journey into Linear Algebra: The Intrigues of Eigenvalues and Eigenvectors.” Medium. Medium. December 17. https://rendazhang.medium.com/a-journey-into-linear-algebra-the-intrigues-of-eigenvalues-and-eigenvectors-a230306674c6.

Additional Reading

See Eigenvectors and Eigenvalues Additional Reading.

Videos

⭐ I suggest that you read the entire reference. Other references can be read in their entirety but I leave that up to you.

The featured image on this page is from the NapsterInBlue website.